Feed aggregator

How to update a user defined database package in production

to_char a big number insert into database become scientific notation

Explain plan estimate vs actual

Learning Azure by having fun with ChatGPT

Are you studying for an Azure certification? You may feel at some point you need a break from the dry and factual technical information. I’m in your shoes right now and I have done some experiments with ChatGPT. I’m not using it to write my text but to learn by having fun! It is very good at telling stories so why not use that for what interests me right now? Azure architecture!

In this blog post I’m going to show you how I use it, it may give you some ideas to make your learning a little more spicy!

Prompt for preparing ChatGPT with Azure

Prompt for preparing ChatGPT with Azure

First you’ll need to prepare ChatGPT by explaining what you want. Set the framework and limit its responses otherwise it is too chatty. As I like science fiction (sci-fi), I’m interested in a futuristic story but you could choose any style you like. My first prompt and ChatGPT response is shown below:

Act as an Azure architect. You are in charge of educating me, your student, to become an Azure architect too. You will respond to my questions by using story telling to explain each concepts requested.

I want you to:

– Always tell a simple and futuristic story

– Limit the reply to 100 words

As an Azure architect, imagine Azure as a vast cosmic city. Each service is a towering skyscraper, and we, architects, design the blueprints. Let’s start with Virtual Networks. They’re like the city’s infrastructure, connecting buildings. Subnets divide areas within each building, like floors. Next, VMs are the inhabitants, residing in these skyscrapers, each serving a unique purpose. Azure Storage, a network of secure vaults, safeguards their belongings. With Azure Load Balancer as traffic controllers, the city thrives, ensuring smooth navigation. As architects of this digital metropolis, we sculpt a future where innovation soars amidst the azure skyline.

Not bad! It gives me some key components of an Azure infrastructure and makes some analogies I can easily picture in my head. With this basis, I can now be the hero of this story by asking more questions.

Build up that Azure cosmic city with ChatGPTYou can then ask questions around your area of study (mine is Azure architecture) and stay immersed into that story. For example:

What are the best practice to govern this Azure cosmic city?

In our cosmic city, governance is paramount. Azure Policy acts as the city’s constitution, ensuring compliance with regulations. Role-Based Access Control (RBAC) assigns roles to citizens, granting appropriate permissions. Azure Resource Manager templates are like city blueprints, ensuring consistency in construction. Tags label buildings for easy identification, while Azure Monitor keeps a watchful eye on city activities. With these best practices, our cosmic city thrives, ensuring order, security, and harmony amidst the azure skyline.It gives you the key elements of Azure governance by continuing its story. You can go on like this back and forth by throwing in any chapter of your course. You can focus on a specific use case as well and see how it integrates with this story:

How can I migrate my SQL Server database on-premises to this city?

Imagine your SQL Server database as a cozy cottage in the countryside. To migrate to our cosmic city, Azure, we’ll use Azure Database Migration Service, like a futuristic moving company. It carefully transports your data to Azure SQL Database, a sleek skyscraper in our city. With minimal downtime, your database seamlessly transitions to its new home, ready to thrive amidst the azure skyline. Just sit back, relax, and watch as your data becomes part of the bustling digital metropolis.How do I ensure my database is highly resilient in this cosmic city?

In our cosmic city, resilience is key. Azure offers a robust solution: Azure SQL Database with built-in redundancy, akin to a fortress with multiple layers of defense. Automatic backups act as a safety net, ensuring data is never lost. Geo-replication creates duplicates across regions, safeguarding against disasters. With Azure SQL Database’s high availability and disaster recovery features, your database thrives amidst the azure skyline, resilient against any digital storm.I don’t know if it works for you but I find it fascinating I can make ChatGPT talk endlessly and respond to any questions I have about Azure in a sci-fi story!

Dive into an Azure componentAs usual with ChatGPT you need to drive the discussion to get the most of it. Let’s see what it has to say about Azure AD (I use the legacy terminology as ChatGPT v3.5 doesn’t know yet Microsoft Entra ID):

What and where would be the Azure AD in this city?

In our bustling city, Azure AD is the central hub, akin to City Hall. It’s where identities are registered, like citizens in our digital society. Located at the heart of the city, Azure AD ensures secure access to every digital service and resource, much like City Hall grants access to essential services. From authentication to authorization, Azure AD governs the city’s digital identities, ensuring a seamless and secure experience for all inhabitants amidst the azure skyline.How would Azure AD conditional access be implemented in this city?

In our city, Azure AD Conditional Access acts as the city’s security checkpoints. Picture futuristic gates at key entry points, only granting access to authorized individuals based on specific conditions. Want to enter the financial district skyscraper? You’ll need more than just a pass; perhaps a biometric scan during business hours. Conditional Access ensures only the right people, with the right permissions, access sensitive areas of our digital city. It’s our shield against cyber threats amidst the azure skyline, ensuring a safe and secure digital environment for all inhabitants.How would I connect from my Active Directory in the countryside to this Azure AD in the cloud?

In the countryside, your Active Directory (AD) is like a quaint village square, bustling with local identities. To connect to Azure AD in our cosmic cloud city, envision a digital bridge spanning the distance between village and skyline. Azure AD Connect is your trusty guide, seamlessly synchronizing identities from countryside AD to Azure AD. With this bridge in place, villagers can access digital services in the cloud using their familiar countryside credentials. Just like that, the countryside becomes an integral part of our digital metropolis, united under the azure skyline.You can then piece by piece for each component create a complete city in the countryside (OnPrem) and in the cloud and illustrate their interaction. We could completely gamify this! SimCity anyone?

LimitationsSome general concepts are more difficult to put into a visual story as for example the Microsoft Cloud Adoption Framework:

What would be the Microsoft Cloud Adoption Framework for Azure in this city?

In our dynamic metropolis, the Microsoft Cloud Adoption Framework for Azure is our roadmap to success. Like a futuristic GPS, it guides us through the twists and turns of digital transformation. Envisioning helps us set our destination, planning ensures we choose the best route, and readiness prepares us for the journey ahead. Migration helps us seamlessly transition, while innovation propels us forward. With governance as our compass and optimization as our fuel, we navigate the ever-changing landscape, building a future where our digital city thrives amidst the azure skyline. Ready to embark on this transformative journey?I don’t find it fantastic as we recognize the empty blabbering of ChatGPT. We would need to be more specific to get something more interesting.

Wrap upYou can see how by relaxing from your studies, you can still continue to learn by having fun in an imaginary world. You could totally convert all this into visual notes that will help you when you renew your certifications. That is something I’m starting to explore.

This is just a glimpse of how you could use ChatGPT in your journey to learn Azure or anything else. Brainstorm any concept, service or component you are learning and see how it integrates into a visual story to get a high-level picture. Let me know if your are using ChatGPT that way for learning and what is the world you are building for it!

L’article Learning Azure by having fun with ChatGPT est apparu en premier sur dbi Blog.

Embedding Machine Learning Models in the Oracle Database: Create an ONNX model

This post is the first of a three-part series where I’m going to show you how to use pre-configured […]

The post Embedding Machine Learning Models in the Oracle Database: Create an ONNX model appeared first on DBASolved.

Local LLM RAG with Unstructured and LangChain [Structured JSON]

Freddie Starr Ate My File ! Finding out exactly what the Oracle Filewatcher is up to

As useful as they undoubtedly are, any use of a DBMS_SCHEDULER File Watchers in Oracle is likely to involve a number of moving parts.

This can make trying to track down issues feel a bit like being on a hamster wheel.

Fortunately, you can easily find out just exactly what the filewatcher is up to, if you know where to look …

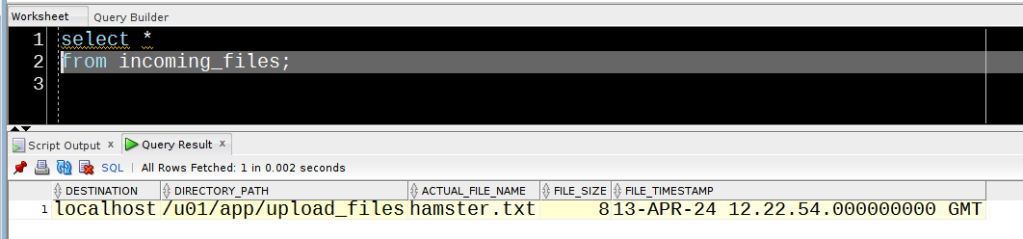

I’ve got a procedure to populate a table with details of any arriving file.

create table incoming_files(

destination VARCHAR2(4000),

directory_path VARCHAR2(4000),

actual_file_name VARCHAR2(4000),

file_size NUMBER,

file_timestamp TIMESTAMP WITH TIME ZONE)

/

create or replace procedure save_incoming_file( i_result sys.scheduler_filewatcher_result)

as

begin

insert into incoming_files(

destination,

directory_path,

actual_file_name,

file_size,

file_timestamp)

values(

i_result.destination,

i_result.directory_path,

i_result.actual_file_name,

i_result.file_size,

i_result.file_timestamp);

end;

/

The filewatcher and associated objects that will invoke this procedure are :

begin

dbms_credential.create_credential

(

credential_name => 'starr',

username => 'fstarr',

password => 'some-complex-password'

);

end;

/

begin

dbms_scheduler.create_file_watcher(

file_watcher_name => 'freddie',

directory_path => '/u01/app/upload_files',

file_name => '*.txt',

credential_name => 'starr',

enabled => false,

comments => 'Feeling peckish');

end;

/

begin

dbms_scheduler.create_program(

program_name => 'snack_prog',

program_type => 'stored_procedure',

program_action => 'save_incoming_file',

number_of_arguments => 1,

enabled => false);

-- need to make sure this program can see the message sent by the filewatcher...

dbms_scheduler.define_metadata_argument(

program_name => 'snack_prog',

metadata_attribute => 'event_message',

argument_position => 1);

-- Create a job that links the filewatcher to the program...

dbms_scheduler.create_job(

job_name => 'snack_job',

program_name => 'snack_prog',

event_condition => null,

queue_spec => 'freddie',

auto_drop => false,

enabled => false);

end;

/

The relevant components have been enabled :

begin

dbms_scheduler.enable('freddie');

dbms_scheduler.enable('snack_prog');

dbms_scheduler.enable('snack_job');

end;

/

… and – connected on the os as fstarr – I’ve dropped a file into the directory…

echo 'Squeak!' >/u01/app/upload_files/hamster.txtFile watchers are initiated by a scheduled run of the SYS FILE_WATCHER job.

The logging_level value determines whether or not the executions of this job will be available in the *_SCHEDULER_JOB_RUN_DETAILS views.

select program_name, schedule_name,

job_class, logging_level

from dba_scheduler_jobs

where owner = 'SYS'

and job_name = 'FILE_WATCHER'

/

PROGRAM_NAME SCHEDULE_NAME JOB_CLASS LOGGING_LEVEL

-------------------- ------------------------- ----------------------------------- ---------------

FILE_WATCHER_PROGRAM FILE_WATCHER_SCHEDULE SCHED$_LOG_ON_ERRORS_CLASS FULL

If the logging_level is set to OFF (which appears to be the default in 19c), you can enable it by connecting as SYSDBA and running :

begin

dbms_scheduler.set_attribute('FILE_WATCHER', 'logging_level', dbms_scheduler.logging_full);

end;

/

The job is assigned the FILE_WATCHER_SCHEDULE, which runs every 10 minutes by default. To check the current settings :

select repeat_interval

from dba_scheduler_schedules

where schedule_name = 'FILE_WATCHER_SCHEDULE'

/

REPEAT_INTERVAL

------------------------------

FREQ=MINUTELY;INTERVAL=10

The thing is, there are times when the SYS.FILE_WATCHER seems to slope off for a tea-break. So, if you’re wondering why your file has not been processed yet, it’s handy to be able to check if this job has run when you expected it to.

In this case, as logging is enabled, we can do just that :

select log_id, log_date, instance_id, req_start_date, actual_start_date

from dba_scheduler_job_run_details

where owner = 'SYS'

and job_name = 'FILE_WATCHER'

and log_date >= sysdate - (1/24)

order by log_date desc

/

LOG_ID LOG_DATE INSTANCE_ID REQ_START_DATE ACTUAL_START_DATE

------- ----------------------------------- ----------- ------------------------------------------ ------------------------------------------

1282 13-APR-24 14.50.47.326358000 +01:00 1 13-APR-24 14.50.47.000000000 EUROPE/LONDON 13-APR-24 14.50.47.091753000 EUROPE/LONDON

1274 13-APR-24 14.40.47.512172000 +01:00 1 13-APR-24 14.40.47.000000000 EUROPE/LONDON 13-APR-24 14.40.47.075846000 EUROPE/LONDON

1260 13-APR-24 14.30.47.301176000 +01:00 1 13-APR-24 14.30.47.000000000 EUROPE/LONDON 13-APR-24 14.30.47.048977000 EUROPE/LONDON

1248 13-APR-24 14.20.47.941210000 +01:00 1 13-APR-24 14.20.47.000000000 EUROPE/LONDON 13-APR-24 14.20.47.127769000 EUROPE/LONDON

1212 13-APR-24 14.10.48.480193000 +01:00 1 13-APR-24 14.10.47.000000000 EUROPE/LONDON 13-APR-24 14.10.47.153032000 EUROPE/LONDON

1172 13-APR-24 14.00.50.676270000 +01:00 1 13-APR-24 14.00.47.000000000 EUROPE/LONDON 13-APR-24 14.00.47.111936000 EUROPE/LONDON

6 rows selected.

Even if the SYS.FILE_WATCHER is not logging, when it does run, any files being watched for are added to a queue, the contents of which can be found in SCHEDULER_FILEWATCHER_QT.

This query will get you the really useful details of what your filewatcher has been up to :

select

t.step_no,

treat( t.user_data as sys.scheduler_filewatcher_result).actual_file_name as filename,

treat( t.user_data as sys.scheduler_filewatcher_result).file_size as file_size,

treat( t.user_data as sys.scheduler_filewatcher_result).file_timestamp as file_ts,

t.enq_time,

x.name as filewatcher,

x.requested_file_name as search_pattern,

x.credential_name as credential_name

from sys.scheduler_filewatcher_qt t,

table(t.user_data.matching_requests) x

where enq_time > trunc(sysdate)

order by enq_time

/

STEP_NO FILENAME FILE_SIZE FILE_TS ENQ_TIME FILEWATCHER SEARCH_PATTERN CREDENTIAL_NAME

---------- --------------- ---------- -------------------------------- ---------------------------- --------------- --------------- ---------------

0 hamster.txt 8 13-APR-24 12.06.58.000000000 GMT 13-APR-24 12.21.31.746338000 FREDDIE *.txt STARR

Happily, in this case, our furry friend has avoided the Grim Squaker…

NOTE – No hamsters were harmed in the writing of this post.

Oracle OEM Read Only Access

With great power comes great responsibility.

Reference: https://en.wikipedia.org/wiki/With_great_power_comes_great_responsibility

On boarding 4 Database Consultants and they have request access to OEM. Sharing SYSMAN password with every DBA is not a good idea and also difficult to determined who messed up.

Here are 2 articles and I favor Doc ID 2180307.1 based on last update and contains screenshots.

OEM 13c How To Create an Administrator with Read Only Access (Doc ID 2925232.1)

Enterprise Manager Base Platform – Version 13.4.0.0.0 and later

Last Update: Feb 1, 2023

EM 13c : How to Create an EM Administrator with Read Only Access to the Performance Pages of a Database Target? (Doc ID 2180307.1)

Enterprise Manager for Oracle Database – Version 13.1.1.0.0 and later

Last Update: May 9, 2023

Would have been nice to have emcli script to do this but beggars cannot be choosers.

Monitor Elasticsearch Cluster with Zabbix

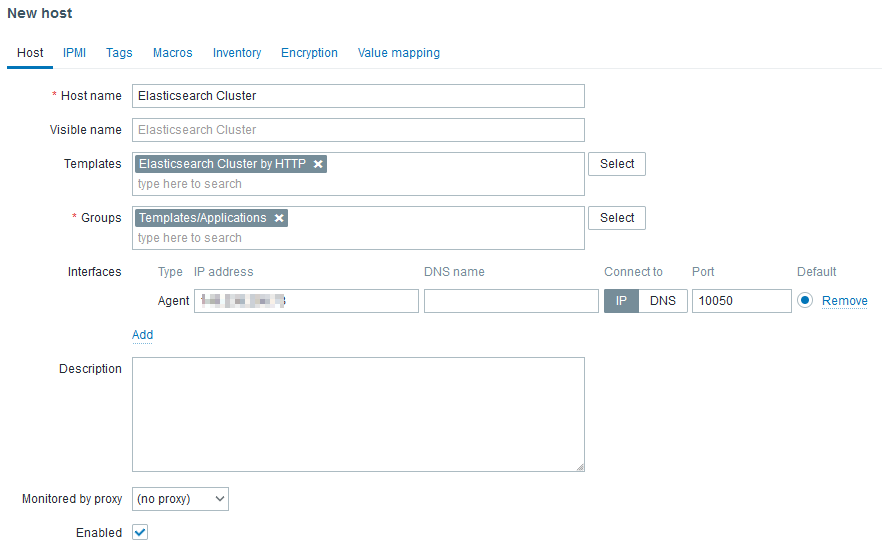

Setting up Zabbix monitoring over an Elasticsearch cluster is quiet easy as it does not require an agent install. As a matter a fact, the official template uses the Elastic REST API. Zabbix server itself will trigger these requests.

In this blog post, I will quick explain how to setup Elasticsearch cluster, then how easy the Zabbix setup is and list possible issues you might encounter.

Elastic Cluster SetupI will not go too much in detail as David covered already many topics around ELK. Anyway, would you need any help to install, tune or monitor your ELK cluster fell free to contact us.

My 3 virtual machines are provisioned with YaK on OCI. Then, I install the rpm on all 3 nodes.

After starting first node service, I am generating an enrollment token with this command:

/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -node

This return a long string which I will need to pass on node 2 and 3 of the cluster (before starting anything):

/usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token <...>

Output will look like that:

This node will be reconfigured to join an existing cluster, using the enrollment token that you provided.

This operation will overwrite the existing configuration. Specifically:

- Security auto configuration will be removed from elasticsearch.yml

- The [certs] config directory will be removed

- Security auto configuration related secure settings will be removed from the elasticsearch.keystore

Do you want to continue with the reconfiguration process [y/N]

After confirming with a y, we are almost ready to start. First, we must update ES configuration file (ie. /etc/elasticsearch/elasticsearch.yml).

- Add IP of first node (only for first boot strapped) in

cluster.initial_master_nodes: ["10.0.0.x"] - Set listening IP of the inter-node trafic (to do on node 1 as well):

transport.host: 0.0.0.0 - Set list of master eligible nodes:

discovery.seed_hosts: ["10.0.0.x:9300"]

Now, we are ready to start node 2 and 3.

Let’s check the health of our cluster:

curl -s https://localhost:9200/_cluster/health -k -u elastic:password | jq

If you forgot elastic password, you can reset it with this command:

/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic

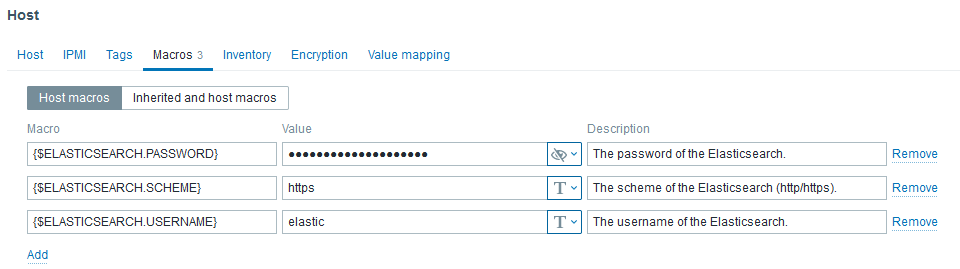

With latest Elasticsearch release, security has drastically increased as SSL communication became the standard. Nevertheless, default MACROS values of the template did not. Thus, we have to customize the followings:

- {$ELASTICSEARCH.USERNAME} to elastic

- {$ELASTICSEARCH.PASSWORD} to its password

- {$ELASTICSEARCH.SCHEME} to https

If SELinux is enabled on your Zabbix server, you will need to allow zabbix_server process to send network request. Following command achieves this:

setsebool zabbix_can_network 1

Next, we can create a host in Zabbix UI like that:

The Agent interface is required but will not be used to reach any agent as there are not agent based (passive or active) checks in the linked template. However, http checks uses HOST.CONN MACRO in the URLs. Ideally, the IP should be a virtual IP or a load balanced IP.

Don’t forget to set the MACROS:

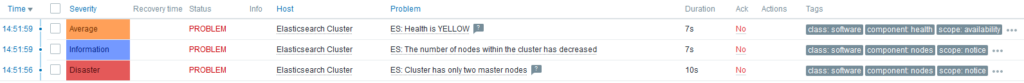

After few minutes, and once nodes discovery ran, you should see something like that:

What will happen if one node stops? On Problems tab of Zabbix UI:

After few seconds, I noticed that ES: Health is YELLOW gets resolved on its own. Why? Because shards are re-balanced across running servers.

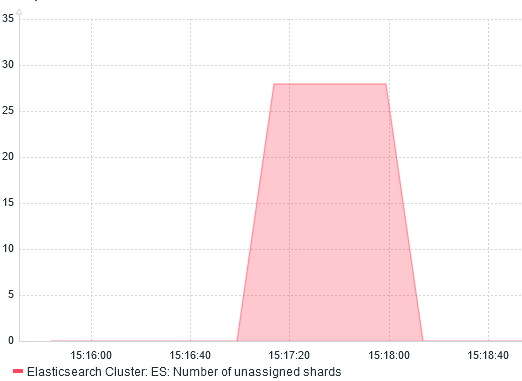

I confirm this by graphing Number of unassigned shards:

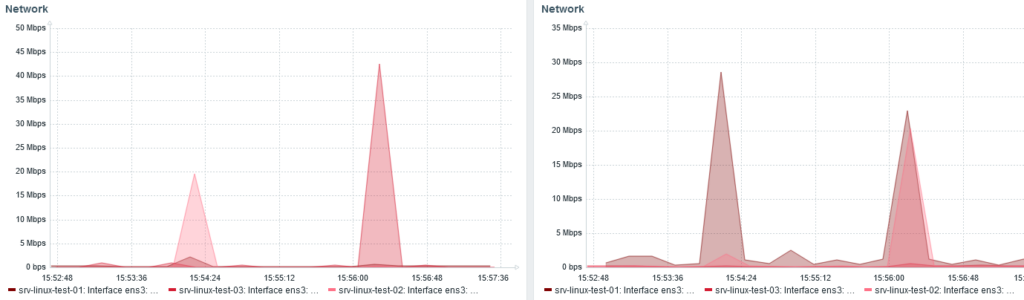

We can also see the re-balancing with the network traffic monitoring:

Received bytes on the left. Sent on the right.

Received bytes on the left. Sent on the right.

Around 15:24, I stopped node 3 and shards were redistributed from node 1 and 2.

When node 3 start, at 15:56, we can see node 1 and 2 (20 Mbps each) send back shards to node 3 (40 Mbps received).

ConclusionWhatever the monitoring tool you are using, it always help to understand what is happening behind the scene.

L’article Monitor Elasticsearch Cluster with Zabbix est apparu en premier sur dbi Blog.

Power BI Report Server: unable to publish a PBIX report

I installed a complete new Power BI Report Server. The server had several network interfaces to be part of several subdomains. In order to access the Power BI Report Server web portal from the different subdomains I defined 3 different HTTPS URL’s in the configuration file and a certificate binding. I used as well a specific active directory service account to start the service. I restarted my Power BI Report Server service checking that the URL reservations were done correctly. I knew that in the past this part could be a source of problems.

Everything seemed to be OK. I tested the accessibility to the Power BI Report Server web portal from the different sub-nets clients and everything was fine.

The next test was the upload of a Power BI report to the web portal. Of course I was sure, having a reports developed with Power BI Desktop RS.

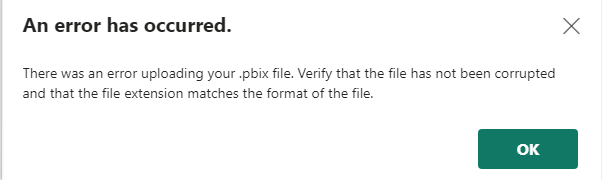

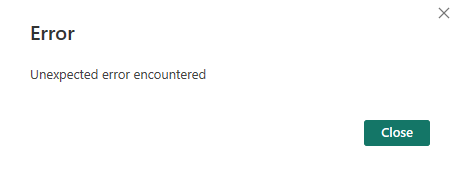

Error raisedAn error was raised when uploading a Power BI report in the web portal.

Trying to publish the report from Power BI Desktop RS was failing as well.

Troubleshouting

Troubleshouting

Report Server log analysis:

I started to analyze the Power BI Report Server logs. For a standard installation they are located in

C:\Program Files\Microsoft Power BI Report Server\PBIRS\LogFiles

In the last RSPowerBI_yyyy_mm_dd_hh_mi_ss.log file written I could find the following error:

Could not start PBIXSystem.Reflection.TargetInvocationException: Exception has been thrown by the target of an invocation. ---> System.Net.HttpListenerException: Access is denied

The error showing an Access denied, the first reaction was to put the service account I used to start the Power BI Report Server in the local Administrators group.

I restarted the service and tried again the publishing of the Power BI report. It worked without issue.

Well, I had a solution, but the it wasn’t an acceptable one. A application service account should not be local admin of a server, it would be a security breach and is not permitted by the security governance.

Based on the information contained in the error message, I could find that is was related to URL reservation, but from the configuration steps, I could not notice any issues.

I analyzed than the list of the reserved URL on the server. Run the following command with elevated permissions to get the list of URL reservation on the server:

Netsh http show urlacl

List of URL reservation found for the user NT SERVICE\PowerBIReportServer:

Reserved URL : http://+:8083/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://sub1.domain.com:443/ReportServerPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://sub2.domain.com:443/ReportServerPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://servername.domain.com.ch:443/PowerBI/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://servername.domain.com.ch:443/wopi/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://sub1.domain.com:443/ReportsPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://sub2.domain.com:443/ReportsPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://servername.domain.com.ch:443/ReportsPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Checking the list I could find:

- the 3 URL’s reserved fro the web service containing the virtual directory I defined ReportServerPBIRS

- the 3 URL’s reserved fro the web portal containing the virtual directory I defined ReportsPBIRS

But I noticed that only 1 URL was reserved for the virtual directories PowerBI and wopi containing the servername.

The 2 others with the subdomains were missing.

SolutionI decided to reserve the URL for PowerBI and wopi virtual directory on the 2 subdomains running the following command with elevated permissions.

Be sure that the SDDL ID used is the one you find in the rsreportserver.config file.

netsh http add urlacl URL=sub1.domain.com:443/PowerBI/ user="NT SERVICE\SQLServerReportingServices" SDDL="D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)"

netsh http add urlacl URL=sub2.domain.com:443/PowerBI/ user="NT SERVICE\SQLServerReportingServices" SDDL="D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)"

netsh http add urlacl URL=sub1.domain.com:443/wopi/ user="NT SERVICE\SQLServerReportingServices" SDDL="D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)"

netsh http add urlacl URL=sub2.domain.com:443/wopi// user="NT SERVICE\SQLServerReportingServices" SDDL="D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)"

Restart the Power BI Report Server service

You can notice that the error in the latest RSPowerBI_yyyy_mm_dd_hh_mi_ss.log file desappeared.

I tested the publishing of a Power BI report again, and it worked.

I hope that this reading has helped to solve your problem.

L’article Power BI Report Server: unable to publish a PBIX report est apparu en premier sur dbi Blog.

Configuring Shared Global Area (SGA) in a Multitenant Database with a PeopleSoft Pluggable Database (PDB)

I have been working on a PeopleSoft Financials application that we have converted from a stand-alone database to be the only pluggable database (PDB) in an Oracle 19c container database (CDB). We have been getting shared pool errors in the PDB that lead to ORA-4031 errors in the PeopleSoft application.

I have written a longer version of this article on my Oracle blog, but here are the main points.

SGA Management with a Parse Intensive System (PeopleSoft).PeopleSoft systems dynamically generate lots of non-shareable SQL code. This leads to lots of parse and consumes more shared pool. ASMM can respond by shrinking the buffer cache and growing the shared pool. However, this can lead to more physical I/O and degrade performance and it is not beneficial for the database to cache dynamic SQL statements that are not going to be executed again. Other parse-intensive systems can also exhibit this behaviour.

In PeopleSoft, I normally set DB_CACHE_SIZE and SHARED_POOL_SIZE to minimum values to stop ASMM shuffling too far in either direction. With a large SGA, moving memory between these pools can become a performance problem in its own right.

We removed SHARED_POOL_SIZE, DB_CACHE_SIZE and SGA_MIN_SIZE settings from the PDB. The only SGA parameters set at PDB level are SGA_TARGET and INMEMORY_SIZE.

SHARED_POOL_SIZE and DB_CACHE_SIZE are set as I usually would for PeopleSoft, but at CDB level to guarantee a minimum buffer cache size.

This is straightforward when there is only one PDB in the CDB. I have yet to see what happens when I have another active PDB with a non-PeopleSoft system and a different kind of workload that puts less stress on the shared pool and more on the buffer cache.

Initialisation Parameters- SGA_TARGET "specifies the total size of all SGA components". Use this parameter to control the memory usage of each PDB. The setting at CDB must be at least the sum of the settings for each PDB.

- Recommendations:

- Use only this parameter at PDB level to manage the memory consumption of the PDB.

- In a CDB with only a single PDB, set SGA_TARGET to the same value at CDB and PDB levels.

- Therefore, where there are multiple PDBs, SGA_TARGET at CDB level should be set to the sum of the setting for each PDB. However, I haven't tested this yet.

- There is no recommendation to reserve SGA for use by the CDB only, nor in my experience is there any need so to do.

- SHARED_POOL_SIZE sets the minimum amount of shared memory reserved to the shared pool. It can optionally be set in a PDB.

- Recommendation: However, do not set SHARED_POOL_SIZE at PDB level. It can be set at CDB level.

- DB_CACHE_SIZE sets the minimum amount of shared memory reserved to the buffer cache. It can optionally be set in a PDB.

- Recommendation: However, do not set DB_CACHE_SIZE at PDB level. It can be set at CDB level.

- SGA_MIN_SIZE has no effect at CDB level. It can be set at PDB level at up to half of the manageable SGA

- Recommendation: However, do not set SGA_MIN_SIZE.

- INMEMORY_SIZE: If you are using in-memory query, this must be set at CDB level in order to reserve memory for the in-memory store. The parameter defaults to 0, in which case in-memory query is not available. The in-memory pool is not managed by Automatic Shared Memory Management (ASMM), but it does count toward the total SGA used in SGA_TARGET.

- Recommendation: Therefore it must also be set in the PDB where in-memory is being used, otherwise we found(contrary to the documetntation) that the parameter defaults to 0, and in-memory query will be disabled in that PDB.

- A-04031 on Multitenant Database with Excessive Amounts of KGLH0 and / or SQLA Memory and Parameter SHARED_POOL_SIZE or SGA_MIN_SIZE Set at the PDB Level (Doc ID 2590172.1) – December 2022, Updated April 2023

- This one says “Remove the PDB-level SHARED_POOL_SIZE and/or SGA_MIN_SIZE initialization parameters. The only SGA memory sizing parameter that Oracle recommends setting at the PDB level is SGA_TARGET.”

- About memory configuration parameter on each PDBs (Doc ID 2655314.1) – Nov 2023

- “As a best practice, please do not to set SHARED_POOL_SIZE and DB_CACHE_SIZE on each PDBs and please manage automatically by setting SGA_TARGET.”

- "This best practice is confirmed by development in Bug 30692720"

- Bug 30692720 discusses how the parameters are validated. Eg. "Sum(PDB sga size) > CDB sga size"

- Bug 34079542: "Unset sga_min_size parameter in PDB."

Zero-Sum Game

Zero-sum is a situation, often cited in game theory, in which one person’s gain is equivalent to another’s loss, so the net change in wealth or benefit is zero.

A zero-sum game may have as few as two players or as many as millions of participants.

Reference: Zero-Sum Game Definition in Finance, With Example

This is what the database team is facing because system team is upgrading operating system to Red Hat Enterprise Linux 7 which will reach its end of life on June 30, 2024 (2 months later).

Here are some Oracle Docs with may be helpful.

IMPORTANT: Need to relink GI and DB

There is one step missing and do you know what it is? Keep in mind, not all environments are the same.

How To Relink The Oracle Grid Infrastructure Standalone (Restart) Installation Or

Oracle Grid InfrastructureRAC/ClusterInstallation (11.2 to 21c).

Doc ID 1536057.1

Relinking Oracle Home FAQ ( Frequently Asked Questions)

Doc ID 1467060.1

Executing “relink all” resets permission of extjob, jssu, oradism, externaljob.ora

Oracle Database – Enterprise Edition – Version 10.2.0.3 and later

Doc ID 1555453.1

PostgreSQL 17: pg_buffercache_evict()

In PostgreSQL up to version 16, there is no way to evict the buffer cache except by restarting the instance. In Oracle you can do that since ages with “alter system flush buffer cache“, but not in PostgreSQL. This will change when PostgreSQL 17 will be released later this year. Of course, flushing the buffer cache is nothing you’d usually like to do in production, but this can be very handy for educational or debugging purposes. This is also the reason why this is intended to be a developer feature.

For getting access to the pg_buffercache_evict function you need to install the pg_buffercache extension as the function is designed to work over the pg_buffercache view:

postgres=# select version();

version

-------------------------------------------------------------------

PostgreSQL 17devel on x86_64-linux, compiled by gcc-7.5.0, 64-bit

(1 row)

postgres=# create extension pg_buffercache;

CREATE EXTENSION

postgres=# \dx

List of installed extensions

Name | Version | Schema | Description

----------------+---------+------------+---------------------------------

pg_buffercache | 1.5 | public | examine the shared buffer cache

plpgsql | 1.0 | pg_catalog | PL/pgSQL procedural language

(2 rows)

postgres=# \d pg_buffercache

View "public.pg_buffercache"

Column | Type | Collation | Nullable | Default

------------------+----------+-----------+----------+---------

bufferid | integer | | |

relfilenode | oid | | |

reltablespace | oid | | |

reldatabase | oid | | |

relforknumber | smallint | | |

relblocknumber | bigint | | |

isdirty | boolean | | |

usagecount | smallint | | |

pinning_backends | integer | | |

Once the extension is in place, the function is there as well:

postgres=# \dfS *evict*

List of functions

Schema | Name | Result data type | Argument data types | Type

--------+----------------------+------------------+---------------------+------

public | pg_buffercache_evict | boolean | integer | func

(1 row)

To load something into the buffer cache we’ll make use of the pre_warm extension and completely load the table we’ll create afterwards:

postgres=# create extension pg_prewarm;

CREATE EXTENSION

postgres=# create table t ( a int, b text );

CREATE TABLE

postgres=# insert into t select i, i::text from generate_series(1,10000) i;

INSERT 0 10000

postgres=# select pg_prewarm ( 't', 'buffer', 'main', null, null );

pg_prewarm

------------

54

(1 row)

postgres=# select pg_relation_filepath('t');

pg_relation_filepath

----------------------

base/5/16401

(1 row)

postgres=# select count(*) from pg_buffercache where relfilenode = 16401;

count

-------

58

(1 row)

If you wonder why there are 58 blocks cached in the buffer cache but we only loaded 54, this is because of the visibility and free space map:

postgres=# select relforknumber from pg_buffercache where relfilenode = 16401 and relforknumber != 0;

relforknumber

---------------

1

1

1

2

(4 rows)

Using the new pg_buffercache_evict() function we are now able to completely evict the buffers of that table from the cache, which results in exactly 58 blocks to be evicted:

postgres=# select pg_buffercache_evict(bufferid) from pg_buffercache where relfilenode = 16401;

pg_buffercache_evict

----------------------

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

(58 rows)

Cross-checking this confirms, that all the blocks are gone:

postgres=# select count(*) from pg_buffercache where relfilenode = 16401;

count

-------

0

(1 row)

Nice, thanks to all involved.

L’article PostgreSQL 17: pg_buffercache_evict() est apparu en premier sur dbi Blog.

Video on Client Side Failover in RAC

Catastrophic Database Failure -- Deletion of Control and Redo Files

AWR Snap ID

What to do when you hit a problem (possibly after an incomplete recovery) that reports an “ORA-00001 unique key violation” on sys.wrm$_snapshot_pk – as reported recently in this thread on the MOSC SQL Performance forum (needs a MOS account.)

Snapshot ids are carefully sequenced, without gaps, so somehow the thing that controls the “current” sequence number has gone backwards and is trying to generate a value that is lower than the current highest value in wrm$_snapshot. The thread I referenced above does point to an article dated 2017 on Alibaba discussing methods of checking for corruption and clearing up messes; but as an extra option you could simply try hacking the control table to set the “last used” snapshot id so something higher than the highest value currently in wrm$_snapshot. The table you need to hack is wrm$_wr_control and here’s an example of its contents from an instance of 19.11 (preceded by a check of the current maximum snap_id in wrm$_snapshot):

SQL> select max(snap_id) max_snap_id, max(end_interval_time) max_snap_time from wrm$_snapshot;

MAX_SNAP_ID MAX_SNAP_TIME

----------- ---------------------------------------------------------------------------

7304 09-APR-24 07.00.14.180 PM

SQL> execute print_table('select * from wrm$_wr_control')

DBID : 3158514872

SNAP_INTERVAL : +00000 01:00:00.0

SNAPINT_NUM : 3600

RETENTION : +00008 00:00:00.0

RETENTION_NUM : 691200

MOST_RECENT_SNAP_ID : 7304

MOST_RECENT_SNAP_TIME : 09-APR-24 07.00.15.169 PM

MRCT_SNAP_TIME_NUM : 1712685600

STATUS_FLAG : 2

MOST_RECENT_PURGE_TIME : 09-APR-24 08.35.57.430 AM

MRCT_PURGE_TIME_NUM : 1712648156

MOST_RECENT_SPLIT_ID : 7295

MOST_RECENT_SPLIT_TIME : 1712648156

SWRF_VERSION : 30

REGISTRATION_STATUS : 0

MRCT_BASELINE_ID : 0

TOPNSQL : 2000000000

MRCT_BLTMPL_ID : 0

SRC_DBID : 3158514872

SRC_DBNAME : CDB$ROOT

T2S_DBLINK :

FLUSH_TYPE : 0

SNAP_ALIGN : 0

MRCT_SNAP_STEP_TM : 1712685613

MRCT_SNAP_STEP_ID : 0

TABLESPACE_NAME : SYSAUX

-----------------

1 row(s) selected

PL/SQL procedure successfully completed.

I have to state that I would not try this on a production system without getting permission – possibly written in blood – from Oracle support: but if I were really desperate to keep existing snapshots and to make it possible for Oracle to generate new snapshots as soon as possible I might try updating the most_recent_snap_id to the value shown in wrm$_snapshot.

Of course you ought to update the most_recent_snap_time as well, and the mrct_snap_time_num (which looks like the number of seconds since 1st Jan 1900 GMT (on my instance)).

Then there’s the interpretation and sanity checking of the other “most recent / mrct” columns to worry about, and the possibility of PDBs vs. CDBs – but those are topics that I’m going to leave to someone else to worry about.

Is 'SELECT * FROM :TABLE_NAME;' available?

Apache httpd Tuning and Monitoring with Zabbix

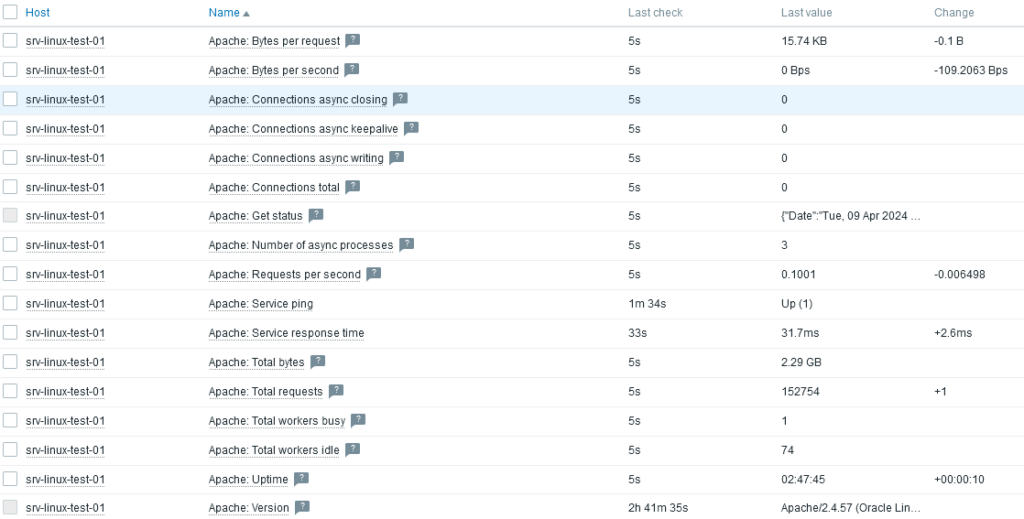

There is no tuning possible without a proper monitoring in place to measure the impact of any changes. Thus, before trying to tune an Apache httpd server, I will explain how to monitor it with Zabbix.

Setup Zabbix MonitoringApache httpd template provided by Zabbix uses mod_status which provides metrics about load, processes and connections.

Before enabling this module, we must ensure it is present. httpd -M 2>/dev/null | grep status_module command will tell you so. Next, we can extend configuration by creating a file in /etc/httpd/conf.d:

<Location "/server-status">

SetHandler server-status

</Location>After a configuration reload, we should be able to access the URL http://<IP>/server-status?auto.

Finally, we can link the template to the host and see that data are collected:

Tuning

Tuning

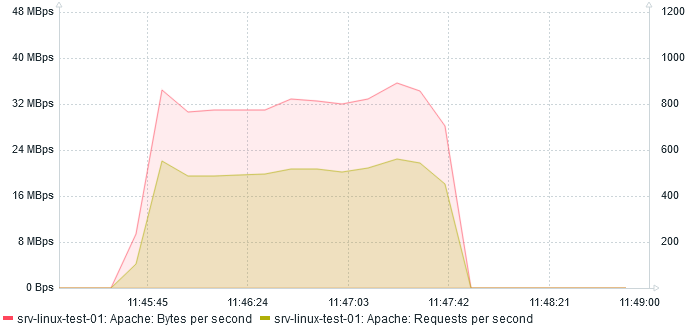

I deployed a simple static web site to the Apache httpd server. To load test that web site, nothing better than JMeter. The load test scenario is simply requesting Home, About, Services and Contact Us pages and retrieve all embedded resources during 2 minutes with 100 threads (ie. users).

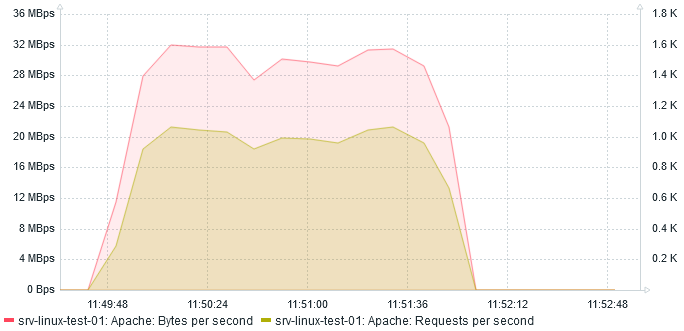

Here are the performances on requests per seconds (right scale) and bytes per seconds (left scale):

At most, server serves 560 req/s at 35 MBps.

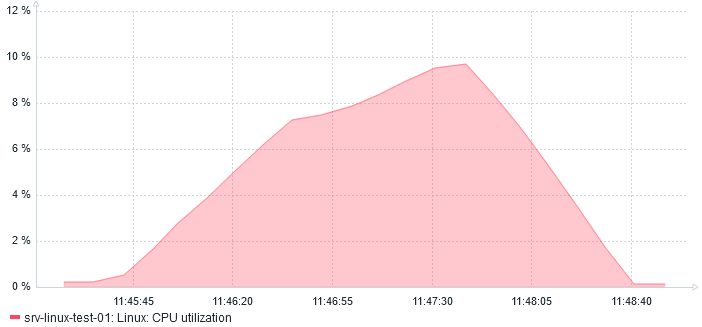

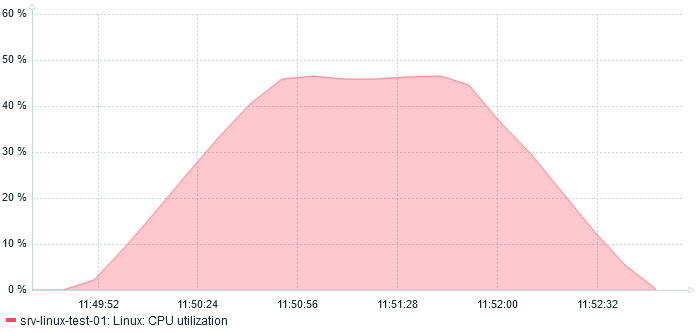

And regarding CPU usage, it almost reaches 10%:

Compression

Compression

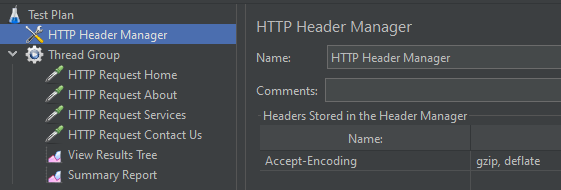

Without any additional headers, Apache httpd will consider the client (here JMeter) does not support gzip. Fortunately, it is possible to set HTTP Header in JMeter. I add it at the top of the test plan so that it will apply to all HTTP Requests below:

Note that I enabled mod_deflate on Apache side.

Let’s run another load test and compare the results!

After two minutes, here is what I see:

The amount of Mbps reduced to 32 which is expected as we are compressing. The amount of req/s increased by almost 100% to 1000 req/s !

On the CPU side, we also see a huge increase:

45% CPU usage

45% CPU usage

This is also more or else expected as compression requires computing.

And NowThe deployed static web site does not have any forms which would require client side compression. That will be a subject for another blog. Also, I can compare with Nginx.

L’article Apache httpd Tuning and Monitoring with Zabbix est apparu en premier sur dbi Blog.

ODA X10-L storage configuration is different from what you may expect

Oracle Database Appliance X10 lineup is available since September 2023. Compared to X9-2 lineup, biggest changes are the AMD Epyc processors replacing Intel Xeons, and new license model regarding Standard Edition 2, clarified by Oracle several weeks ago. Apart from these new things, the models are rather similar to previous ones, with the Small model for basic needs, a HA model with RAC and high-capacity storage for big critical databases, and a much more popular Large model for most of the needs.

2 kinds of disks inside the ODA X10-LThe ODA I’ve worked on is a X10-L with 2x disk expansions, meaning that I have the 2x 6.8TB disks from the base configuration, plus 4x 6.8TB additional disks. The first 4 disks are classic disks visible on the front panel of the ODA. As there are only 4 bays in the front, the other disks are internal, called AIC for Add-In Card (PCIe). You can have up to 3 disk expansions, meaning 4x disks in the front and 4x AIC disks inside the server. You should know that only the front disks are hot swappable. The other disks being PCIe cards, you will need to shut down the server and open its cover to remove, add or replace a disk. 6.8TB is the RAW capacity, consider that real capacity is something like 6.2TB, but usable capacity will be lower as you will need to use ASM redundancy to protect your blocks. In the ODA documentation, you will find the usable capacity for each disk configuration.

2 AIC disks inside an ODA X10-L. The first 4 disks are in the front.

First contact with X10-L – using odacliodacli describe-system is very useful for an overview of the ODA you’re connected to:

odacli describe-system

Appliance Information

----------------------------------------------------------------

ID: 3fcd1093-ea74-4f41-baa1-f325b469a3e1

Platform: X10-2L

Data Disk Count: 10

CPU Core Count: 4

Created: January 10, 2024 2:26:43 PM CET

System Information

----------------------------------------------------------------

Name: dc1oda002

Domain Name: ad.dbiblogs.ch

Time Zone: Europe/Zurich

DB Edition: EE

DNS Servers: 10.100.50.8 10.100.50.9

NTP Servers: 10.100.50.8 10.100.50.9

Disk Group Information

----------------------------------------------------------------

DG Name Redundancy Percentage

------------------------- ------------------------- ------------

DATA NORMAL 85

RECO NORMAL 15Data Disk Count is not what I’ve expected. This is normally the number of DATA disks, it should be 6 on this ODA, not 10.

Let’s do a show disk with odaadmcli:

odaadmcli show disk

NAME PATH TYPE STATE STATE_DETAILS

pd_00 /dev/nvme0n1 NVD ONLINE Good

pd_01 /dev/nvme1n1 NVD ONLINE Good

pd_02 /dev/nvme3n1 NVD ONLINE Good

pd_03 /dev/nvme2n1 NVD ONLINE Good

pd_04_c1 /dev/nvme8n1 NVD ONLINE Good

pd_04_c2 /dev/nvme9n1 NVD ONLINE Good

pd_05_c1 /dev/nvme6n1 NVD ONLINE Good

pd_05_c2 /dev/nvme7n1 NVD ONLINE GoodOK, this command only displays the DATA disks, so the system disks are not in this list, but there are still 8 disks and not 6.

Let’s have a look on the system side.

First contact with X10-L – using system commandsWhat is detected by the OS?

lsblk | grep disk

nvme9n1 259:0 0 3.1T 0 disk

nvme6n1 259:6 0 3.1T 0 disk

nvme8n1 259:12 0 3.1T 0 disk

nvme7n1 259:18 0 3.1T 0 disk

nvme4n1 259:24 0 447.1G 0 disk

nvme5n1 259:25 0 447.1G 0 disk

nvme3n1 259:26 0 6.2T 0 disk

nvme0n1 259:27 0 6.2T 0 disk

nvme1n1 259:28 0 6.2T 0 disk

nvme2n1 259:29 0 6.2T 0 disk

asm/acfsclone-242 250:123905 0 150G 0 disk /opt/oracle/oak/pkgrepos/orapkgs/clones

asm/commonstore-242 250:123906 0 5G 0 disk /opt/oracle/dcs/commonstore

asm/odabase_n0-242 250:123907 0 40G 0 disk /u01/app/odaorabase0

asm/orahome_sh-242 250:123908 0 80G 0 disk /u01/app/odaorahome

This is rather strange. I can see 10 disks, the 2x 450GB disks are for the system (and normally not considered as DATA disks by odacli), I can also find 4x 6.2TB disks. But instead of having 2x additional 6.2TB disks, I have 4x 3.1TB disks. The overall capacity is OK, 37.2TB, but it’s different compared to previous ODA generations.

Let’s confirm this with fdisk:

fdisk -l /dev/nvme0n1

Disk /dev/nvme0n1: 6.2 TiB, 6801330364416 bytes, 13283848368 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: E20D9013-1982-4F66-B7A2-5FE0B1BC8F74

Device Start End Sectors Size Type

/dev/nvme0n1p1 4096 1328386047 1328381952 633.4G Linux filesystem

/dev/nvme0n1p2 1328386048 2656767999 1328381952 633.4G Linux filesystem

/dev/nvme0n1p3 2656768000 3985149951 1328381952 633.4G Linux filesystem

/dev/nvme0n1p4 3985149952 5313531903 1328381952 633.4G Linux filesystem

/dev/nvme0n1p5 5313531904 6641913855 1328381952 633.4G Linux filesystem

/dev/nvme0n1p6 6641913856 7970295807 1328381952 633.4G Linux filesystem

/dev/nvme0n1p7 7970295808 9298677759 1328381952 633.4G Linux filesystem

/dev/nvme0n1p8 9298677760 10627059711 1328381952 633.4G Linux filesystem

/dev/nvme0n1p9 10627059712 11955441663 1328381952 633.4G Linux filesystem

/dev/nvme0n1p10 11955441664 13283823615 1328381952 633.4G Linux filesystem

fdisk -l /dev/nvme8n1

Disk /dev/nvme8n1: 3.1 TiB, 3400670601216 bytes, 6641934768 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: A3086CB0-31EE-4F78-A6A6-47D53149FDAE

Device Start End Sectors Size Type

/dev/nvme8n1p1 4096 1328386047 1328381952 633.4G Linux filesystem

/dev/nvme8n1p2 1328386048 2656767999 1328381952 633.4G Linux filesystem

/dev/nvme8n1p3 2656768000 3985149951 1328381952 633.4G Linux filesystem

/dev/nvme8n1p4 3985149952 5313531903 1328381952 633.4G Linux filesystem

/dev/nvme8n1p5 5313531904 6641913855 1328381952 633.4G Linux filesystemOK, the 6.2TB disks are split in 10 partitions, and the 3.1TB disks are split in 5 partitions. It makes sense because ASM needs partitions of the same size inside a diskgroup.

First contact with X10-L – using ASMNow let’s have a look within ASM, the most important thing being that ASM is able to manage the storage correctly:

su - grid

sqlplus / as sysasm

select a.name "Diskgroup" , round(a.total_mb/1024) "Size GB", round(a.free_mb/1024) "Free GB", round(a.usable_file_mb/1024) "Usable GB", 100*round((a.total_mb-a.free_mb)/a.total_mb,1) "Use%" from v$asm_diskgroup a ;

Diskgroup Size GB Free GB Usable GB Use%

------------------------------ ---------- ---------- ---------- ----------

DATA 30404 12341 3637 60

RECO 7601 5672 2203 30

select name,total_mb/1024 "GB", GROUP_NUMBER from v$asm_disk order by 3 desc;

NAME GB GROUP_NUMBER

------------------------------ ---------- ------------

NVD_S02_S6UENA0W1072P9 633.421875 2

NVD_S02_S6UENA0W1072P10 633.421875 2

NVD_S05_C2_PHAZ25110P9 633.421875 2

NVD_S05_C2_PHAZ25110P10 633.421875 2

NVD_S03_S6UENA0W1073P10 633.421875 2

NVD_S00_S6UENA0W1075P10 633.421875 2

NVD_S01_S6UENA0W1072P10 633.421875 2

NVD_S00_S6UENA0W1075P9 633.421875 2

NVD_S01_S6UENA0W1072P9 633.421875 2

NVD_S04_C2_PHAZ24710P10 633.421875 2

NVD_S04_C2_PHAZ24710P9 633.421875 2

NVD_S03_S6UENA0W1073P9 633.421875 2

NVD_S05_C1_PHAZ25110P2 633.421875 1

NVD_S00_S6UENA0W1075P8 633.421875 1

NVD_S04_C2_PHAZ24710P6 633.421875 1

NVD_S00_S6UENA0W1075P6 633.421875 1

NVD_S02_S6UENA0W1072P1 633.421875 1

NVD_S05_C1_PHAZ25110P3 633.421875 1

NVD_S04_C1_PHAZ24710P2 633.421875 1

NVD_S03_S6UENA0W1073P4 633.421875 1

NVD_S00_S6UENA0W1075P2 633.421875 1

NVD_S02_S6UENA0W1072P6 633.421875 1

NVD_S05_C2_PHAZ25110P6 633.421875 1

NVD_S00_S6UENA0W1075P7 633.421875 1

NVD_S00_S6UENA0W1075P5 633.421875 1

NVD_S04_C2_PHAZ24710P7 633.421875 1

NVD_S04_C2_PHAZ24710P8 633.421875 1

NVD_S02_S6UENA0W1072P4 633.421875 1

NVD_S03_S6UENA0W1073P7 633.421875 1

NVD_S00_S6UENA0W1075P1 633.421875 1

NVD_S04_C1_PHAZ24710P1 633.421875 1

NVD_S01_S6UENA0W1072P2 633.421875 1

NVD_S01_S6UENA0W1072P1 633.421875 1

NVD_S01_S6UENA0W1072P3 633.421875 1

NVD_S03_S6UENA0W1073P5 633.421875 1

NVD_S01_S6UENA0W1072P4 633.421875 1

NVD_S02_S6UENA0W1072P3 633.421875 1

NVD_S01_S6UENA0W1072P7 633.421875 1

NVD_S02_S6UENA0W1072P7 633.421875 1

NVD_S05_C1_PHAZ25110P1 633.421875 1

NVD_S03_S6UENA0W1073P1 633.421875 1

NVD_S01_S6UENA0W1072P5 633.421875 1

NVD_S05_C1_PHAZ25110P5 633.421875 1

NVD_S04_C1_PHAZ24710P3 633.421875 1

NVD_S00_S6UENA0W1075P3 633.421875 1

NVD_S03_S6UENA0W1073P6 633.421875 1

NVD_S02_S6UENA0W1072P8 633.421875 1

NVD_S00_S6UENA0W1075P4 633.421875 1

NVD_S04_C1_PHAZ24710P5 633.421875 1

NVD_S01_S6UENA0W1072P8 633.421875 1

NVD_S04_C1_PHAZ24710P4 633.421875 1

NVD_S03_S6UENA0W1073P3 633.421875 1

NVD_S05_C1_PHAZ25110P4 633.421875 1

NVD_S03_S6UENA0W1073P2 633.421875 1

NVD_S01_S6UENA0W1072P6 633.421875 1

NVD_S05_C2_PHAZ25110P8 633.421875 1

NVD_S02_S6UENA0W1072P5 633.421875 1

NVD_S02_S6UENA0W1072P2 633.421875 1

NVD_S03_S6UENA0W1073P8 633.421875 1

NVD_S05_C2_PHAZ25110P7 633.421875 1This is also different from older generations. On X9-2 lineup, the RECO diskgroup (group 2) has as many partitions as diskgroup DATA (group 1). This is no more the way it works. But regarding the free and usable GB, everything is fine.

Why Oracle did differently from older ODAs?Oldest ODAs were using spinning disks, and for maximizing performance, Oracle created 2 partitions on each disk: one for DATA on the external part of the disk, and one for RECO on the internal part of the disk. All the DATA partitions were then added to the DATA diskgroup, and all RECO partitions to the RECO diskgroup. The more disks you had, the faster the read and write speed was. Redologs had their own dedicated disks, usually 4 small SSDs using high redundancy.

Nowadays, ODAs are mostly using SSDs, and read and write speed is identical wherever the block is. And the number of disks doesn’t matter, speed is mostly limited by the PCIe bandwidth and chips on the SSDs, but as far as I know, the speed of one NVMe SSD is enough for 95% of the databases.

Internal AIC disks on ODA X10-L are split in two disks for some reasons, so it’s not possible anymore to have the big partitions we had before.

ConclusionThis X10-L was initially deployed using version 19.21. As it wasn’t already in use, and to make sure everything is fine on the hardware and software side, it was decided to do a fresh reimaging using latest 19.22. It didn’t change anything, odacli still sees 10 disks, but apart from that, everything is fine.

Disk size and partitioning is now different, but it won’t change anything for most of us.

L’article ODA X10-L storage configuration is different from what you may expect est apparu en premier sur dbi Blog.

PostgreSQL 17: Split and Merge partitions

Since declarative partitioning was introduced in PostgreSQL 10 there have been several additions and enhancements throughout the PostgreSQL releases. PostgreSQL 17, expected to be released around September/October this year, is no exception to that and will come with two new features when it comes to partitioning: Splitting and Merging partitions.

Before we can have a look at that, we need a partitioned table, some partitions and some data, so lets generate this. Splitting and Merging works for range and list partitioning and because most of the examples for partitioning you can find online go for range partitioning, we’ll go for list partitioning in this post:

postgres=# create table t ( a int, b text ) partition by list (b);

CREATE TABLE

postgres=# \d+ t

Partitioned table "public.t"

Column | Type | Collation | Nullable | Default | Storage | Compression | Stats target | Description

--------+---------+-----------+----------+---------+----------+-------------+--------------+-------------

a | integer | | | | plain | | |

b | text | | | | extended | | |

Partition key: LIST (b)

Number of partitions: 0

postgres=# create table t_p1 partition of t for values in ('a');

CREATE TABLE

postgres=# create table t_p2 partition of t for values in ('b');

CREATE TABLE

postgres=# create table t_p3 partition of t for values in ('c');

CREATE TABLE

postgres=# create table t_p4 partition of t for values in ('d');

CREATE TABLE

postgres=# \d+ t

Partitioned table "public.t"

Column | Type | Collation | Nullable | Default | Storage | Compression | Stats target | Description

--------+---------+-----------+----------+---------+----------+-------------+--------------+-------------

a | integer | | | | plain | | |

b | text | | | | extended | | |

Partition key: LIST (b)

Partitions: t_p1 FOR VALUES IN ('a'),

t_p2 FOR VALUES IN ('b'),

t_p3 FOR VALUES IN ('c'),

t_p4 FOR VALUES IN ('d')

This gives us a simple list partitioned table and four partitions. Lets add some data to the partitions:

postgres=# insert into t select i, 'a' from generate_series(1,100) i;

INSERT 0 100

postgres=# insert into t select i, 'b' from generate_series(101,200) i;

INSERT 0 100

postgres=# insert into t select i, 'c' from generate_series(201,300) i;

INSERT 0 100

postgres=# insert into t select i, 'd' from generate_series(301,400) i;

INSERT 0 100

postgres=# select count(*) from t_p1;

count

-------

100

(1 row)

postgres=# select count(*) from t_p2;

count

-------

100

(1 row)

postgres=# select count(*) from t_p3;

count

-------

100

(1 row)

postgres=# select count(*) from t_p4;

count

-------

100

(1 row)

Suppose we want to merge the first two partitions, containing values of ‘a’ and ‘b’. This can now be easily done with the new merge partition DDL command:

postgres=# alter table t merge partitions (t_p1, t_p2) into t_p12;

ALTER TABLE

postgres=# \d+ t

Partitioned table "public.t"

Column | Type | Collation | Nullable | Default | Storage | Compression | Stats target | Description

--------+---------+-----------+----------+---------+----------+-------------+--------------+-------------

a | integer | | | | plain | | |

b | text | | | | extended | | |

Partition key: LIST (b)

Partitions: t_p12 FOR VALUES IN ('a', 'b'),

t_p3 FOR VALUES IN ('c'),

t_p4 FOR VALUES IN ('d')

The same the other way around: Splitting the new combined partition into single partitions:

postgres=# alter table t split partition t_p12 into ( partition t_p1 for values in ('a'), partition t_p2 for values in ('b'));

ALTER TABLE

postgres=# \d+ t

Partitioned table "public.t"

Column | Type | Collation | Nullable | Default | Storage | Comp>

--------+---------+-----------+----------+---------+----------+----->

a | integer | | | | plain | >

b | text | | | | extended | >

Partition key: LIST (b)

Partitions: t_p1 FOR VALUES IN ('a'),

t_p2 FOR VALUES IN ('b'),

t_p3 FOR VALUES IN ('c'),

t_p4 FOR VALUES IN ('d')

Nice, but there currently is a downside with this: Both operations will take an “ACCESS EXCLUSIVE LOCK” on the parent table, so everything against that table will be blocked for the time it takes to either split or merge the partitions. I am not to worried about that, as this was the same with other features related to partitioning in the past. Over time, locking was reduced and I guess this will be the same with this feature.

L’article PostgreSQL 17: Split and Merge partitions est apparu en premier sur dbi Blog.